|

I am a PhD student at Auburn University, Alabama, US, where I work on explainable AI (XAI) for multimodal models under the supervision of Professor Anh Nguyen. I am also passionate about Embodied AI, in which an AI understands not only language but also other modalities such as vision and sound.

ngthanhtinqn@gmail.com / CV / Blog / Google Scholar / ResearchGate / Short Science / Notion / Twitter / Github / Coding

Category: Latest News / Research Interest / Experience / Publications / Projects / Talks / Academic Service |

|

|

2024

March 13, 2024: One paper accepted at NAACL 2024 Findings.

2023

Jul 4, 2023: One paper accepted at Knowledge-based Systems (IF: 8.8).

2022

Nov 1, 2022: One paper accepted at Expert Systems with Applications (IF: 10.35). |

|

|

|

I completed my Master's degree at Sejong University, Seoul, Korea, in Aug 2022. During that period, I worked on Deep Reinforcement Learning for Instruction Following Navigation with Professor Yong-Guk Kim. I completed my Bachelor's degree at the Vietnam National University, The University of Science, Ho Chi Minh City, in Aug 2019. My thesis was on Robot Localization and Object Tracking, supervised by Professor Ly Quoc Ngoc. |

|

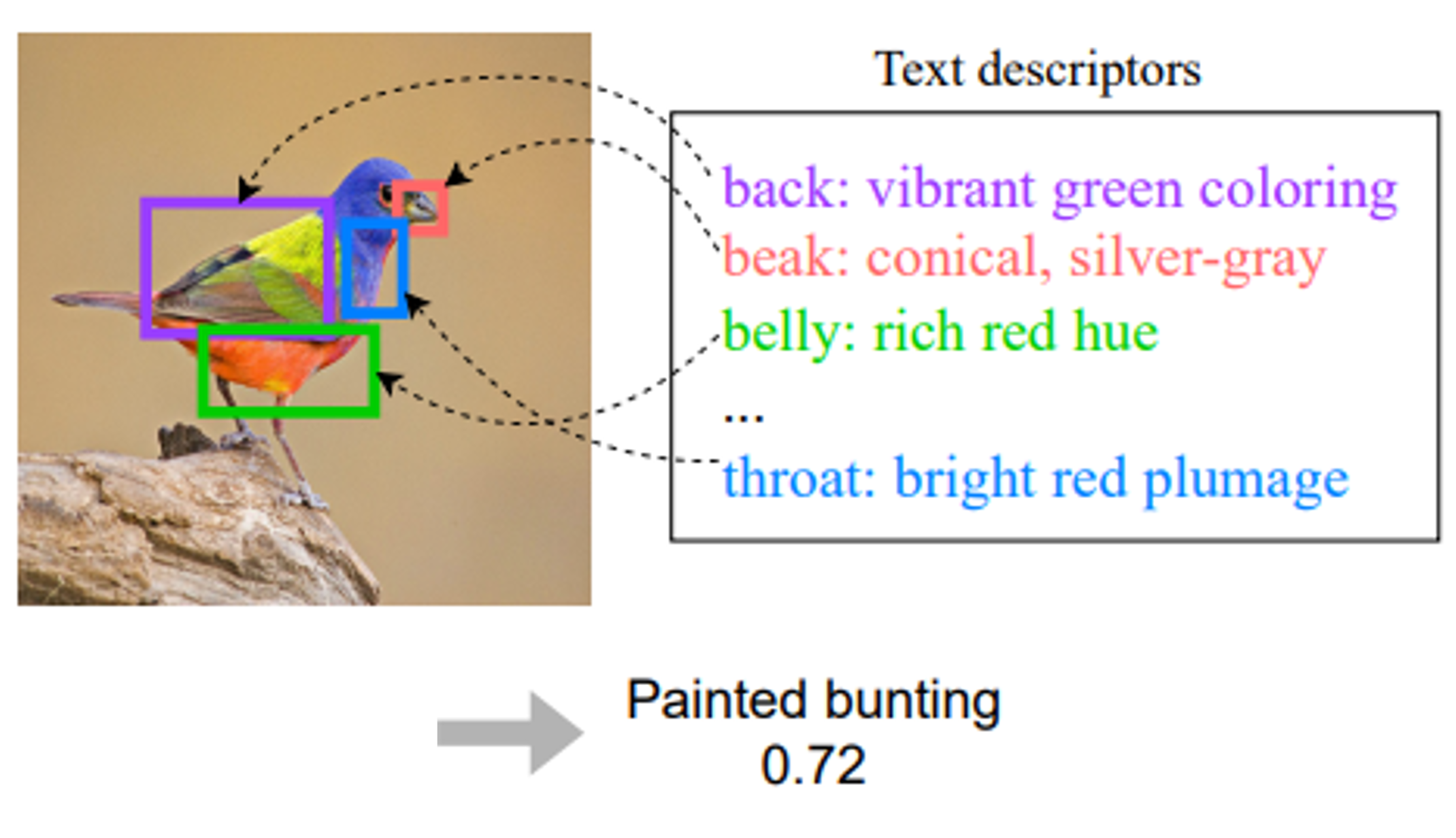

Thang Pham, Peijie Chen, Tin Nguyen, Seunghyun Yoon, Trung Bui, Anh Nguyen

NAACL, 2024 Findings

Code / Paper

We propose a part-based bird classifier that makes predictions based on part-wise descriptions. Our method directly uses human-provided descriptions (in this work, generated by GPT-4). It outperforms CLIP and M&V by 10 points on CUB and 28 points on NABirds. |

|

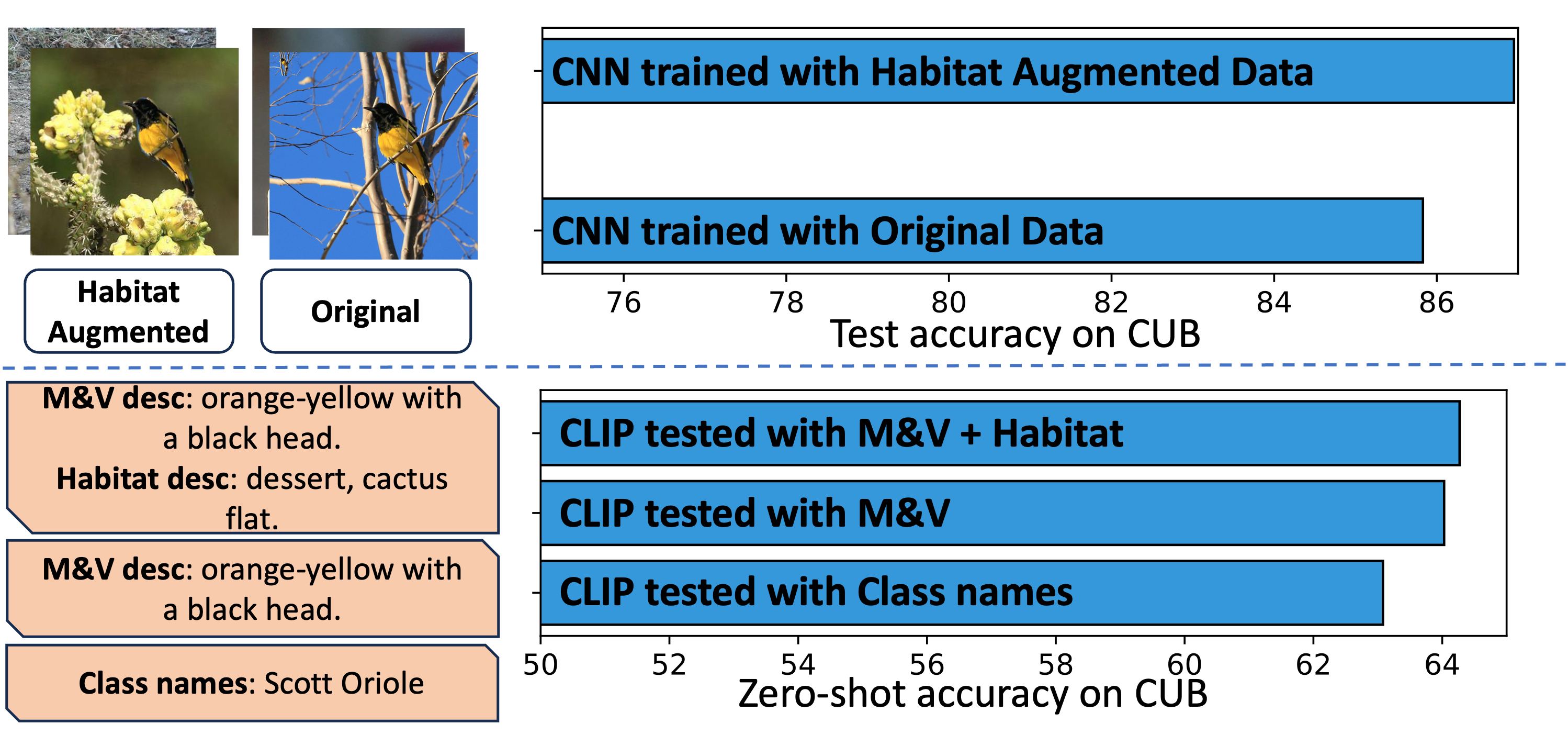

Tin Nguyen, Anh Nguyen

ArXiv Preprint, 2023

Code / Paper

We are the first to explore integrating habitat information, one of the four major cues ornithologists use to identify birds, into modern bird classifiers. |

|

Thanh Tin Nguyen, Marvin, Yong-Guk Kim

ArXiv Preprint, 2024

Project Page / Code / Paper

This study proposes an attention-based module for analyzing the sentiment and emotion of memes. |

|

Thanh Tin Nguyen, Anh H. Vo, Soo-Mi Choi, Yong-Guk Kim

Knowledge-based Systems (KBS), Jul 4, 2023

Video / Code / Paper

This study proposes a coarse-to-fine fusion module between vision and language, which helps an agent learn a joint representation while navigating a virtual environment. |

|

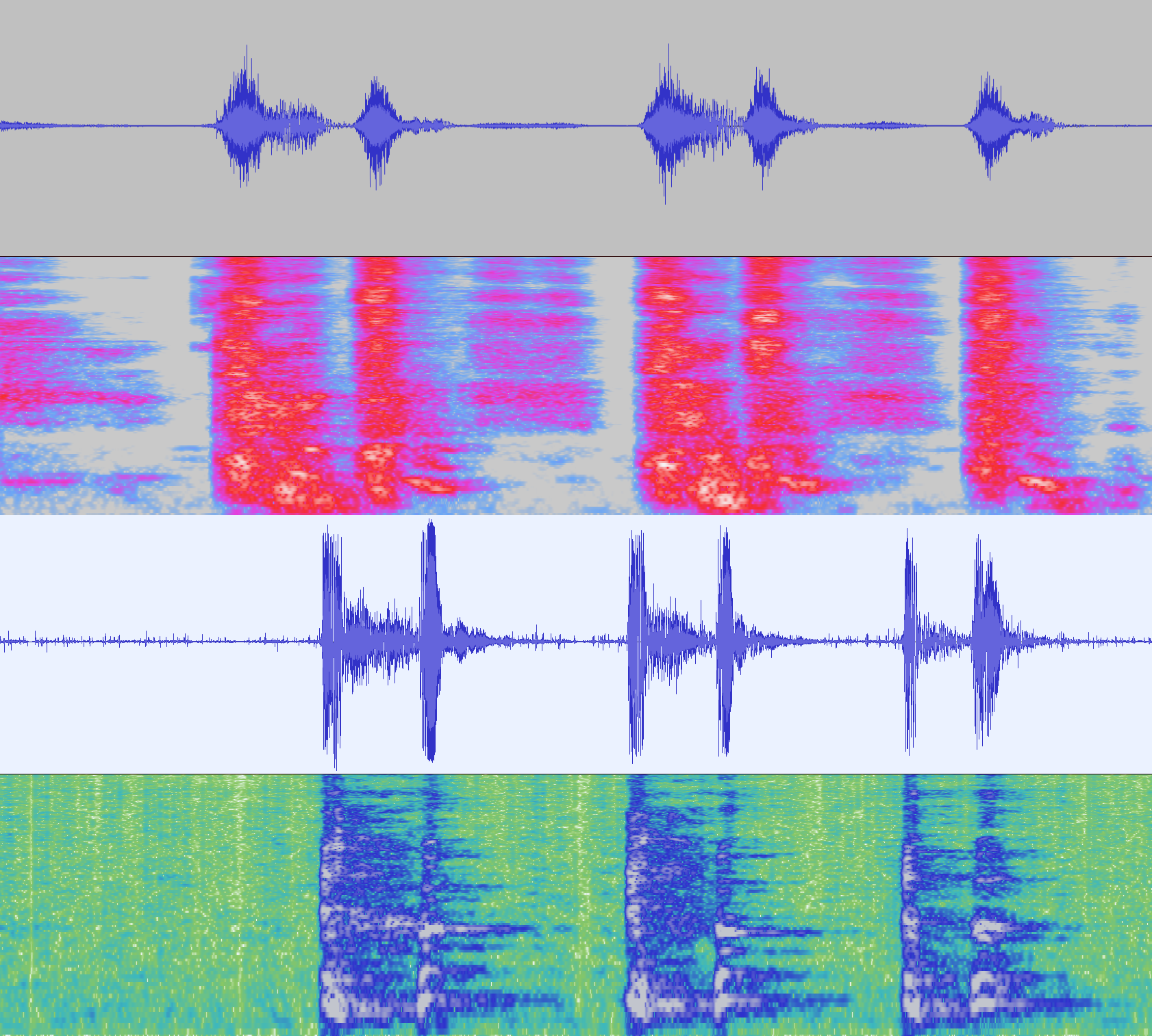

Long H. Nguyen, Nhat Truong Pham, Van Huong Do, Liu Tai Nguyen, Thanh Tin Nguyen, Van Dung Do, Hai Nguyen, Ngoc Duy Nguyen

(Challenge 1st) Expert Systems with Applications (ESWA), November 1, 2022

Link Challenge / Paper

Fruit-CoV is a two-stage vision framework that detects SARS-CoV-2 infections from recorded cough sounds. We won 100 million VND (~$4275) for 1st place in this challenge. |

|

Nguyen Thanh Tin, Ly Quoc Ngoc, Le Bao Tuan

International Journal of Advanced Computer Science and Applications (SAI), April 14, 2020

Video 1 / Video 2 / Video 3 / Paper

This work applies YOLOv3 to a Particle Filter to improve its speed and accuracy, and presents an end-to-end localization framework that uses a stereo camera and an IMU in unknown environments. |

|

Nguyen Thanh Tin, Nhat Truong Pham, Yong-Guk Kim, et al.

(Workshop) First Workshop on Multimodal Fact-Checking and Hate Speech Detection, AAAI 2022, 2021

Paper / Link Challenge / Project Page / Code

Achieved 1st place on the public leaderboard, using SAN, multihop, and CNNRoBerta as multimodal models, and text-only and image-only models as single-modality models. |

|

Nguyen Thanh Tin

(Workshop, 3rd place) VLSP - vieCap4H Challenge: Automatic image caption generation for healthcare domains in Vietnamese (Oral presentation), October 25, 2021

(VNU Journal of Science (JCSCE)) vieCap4H - VLSP 2021: Vietnamese Image Captioning for Healthcare Domain using Swin Transformer and Attention-based LSTM, May 5, 2022

Paper / Link Challenge / Project Page / Code

[Most interesting discussed idea award] Achieved 3rd place on the private leaderboard, using Swin Transformer (among other types) as the encoder and an attention-based LSTM as the decoder. Chosen for the Special Issue of VNU Journal of Science (JCSCE). |

|

Nguyen Thanh Tin

Project Page / Code

Implemented in C++. The project uses the Background Subtraction Method, a Kalman Filter, and the Hungarian Algorithm. |

|

Nguyen Thanh Tin

Project Page / Code

A multi-agent (2 robots) setup for the Instruction Following Navigation task. |

|

Nguyen Thanh Tin |